CameraView, Offscreen (headless), and Realistic Rendering

The view is realized using very basic OpenGL rendering. It is straight-forward to grab the image (and, e.g., make a video from such images), as well as the depth (e.g. to simulate a depth camera).

However, grabbing from the actual view window is brittle, as it depends on the window manager and might give weird results if the window is not visible. The package provides an offscreen (headless) implementation of the OpenGL rendering, which allows to compute images and depth (also object segmentation labels) without window or X.

The simple OpenGL rendering is ok for depth camera simulation, but not visually realistic. An interface to Nvidia’s NVISSI physics-based rendering enables very flexible realistic rendering.

Grabbing images and depth from the view window

The view is internally implemented using glfw and most basic opengl. It’s simple to grab the rgb and depth buffers.

[1]:

from robotic import ry

import numpy as np

import matplotlib.pyplot as plt

print(ry.__version__, ry.compiled())

0.0.23 compile time: Oct 20 2023 14:36:37

[2]:

C = ry.Config()

C.addFile(ry.raiPath('scenarios/pandaSingle.g'))

C.view()

[2]:

0

[3]:

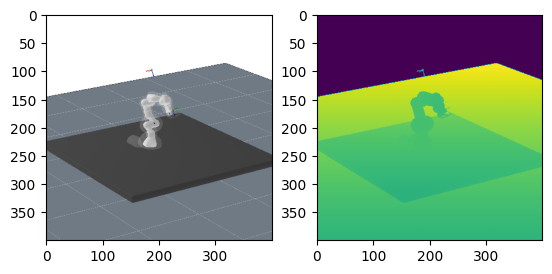

rgb = C.view_getRgb()

depth = C.view_getDepth()

print(rgb.shape, depth.shape)

(400, 400, 3) (400, 400)

[4]:

fig = plt.figure()

fig.add_subplot(1,2,1)

plt.imshow(rgb)

fig.add_subplot(1,2,2)

plt.imshow(depth)

plt.show()

The camera pose can be set to be at a particular frame. (Create a dedicated frame, if you want freedom to set the camera). Also camera intrinsics (focalLength, width, height) can be set with frame attributes. Our scene has a cameraTop predefined:

[5]:

f = C.getFrame('cameraTop')

f.setAttribute('focalLength', .5) # wide angle

f.setAttribute('width', 200) # super wide angle

f.setAttribute('height', 200) # super wide angle

C.view_setCamera(f)

Saving pngs for video generation

There might be many better ways to generate a video. But a simple one it to write many numered pngs and call ffmpeg on shell. For instance:

[6]:

# first ensure a folder

import os

os.system('mkdir -p z.vid')

[6]:

0

[7]:

q = C.getJointState()

for t in range(30):

q = q + .02

C.setJointState(q)

C.view()

C.view_savePng('z.vid/')

[8]:

os.system('ffmpeg -v 16 -f image2 -framerate 12 -i z.vid/%04d.png -c:v libx264 -r 25 -pix_fmt yuv420p -y vid.mp4')

[8]:

0

…which creates an mp4 with 12 images per second.

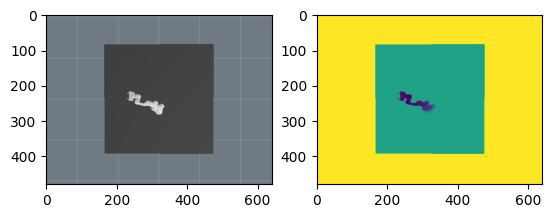

Offscreen (headless) CameraView

But this way of grabbing images and depth from the view window is brittle (as it depends on the window manager) and also includes markers and non-visual objects. A much better way is to use offscreen method:

[9]:

cam = ry.CameraView(C)

[10]:

rgb, depth = cam.computeImageAndDepth(C)

[11]:

fig = plt.figure()

fig.add_subplot(1,2,1)

plt.imshow(rgb)

fig.add_subplot(1,2,2)

plt.imshow(depth)

plt.show()

[12]:

cam.setCamera('cameraTop') # cameraWrist is another pre-defined frame

[12]:

<robotic.ry.CameraViewSensor at 0x7fb86c52d8b0>

[13]:

rgb, depth = cam.computeImageAndDepth(C)

[14]:

fig = plt.figure()

fig.add_subplot(1,2,1)

plt.imshow(rgb)

fig.add_subplot(1,2,2)

plt.imshow(depth)

plt.show()

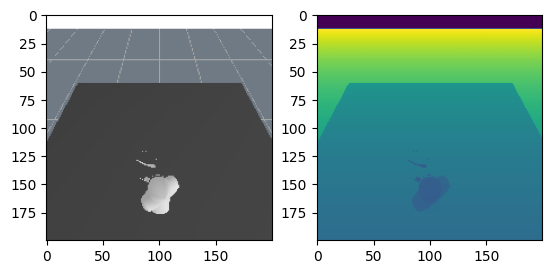

Point Cloud

[15]:

from robotic import ry

import matplotlib.pyplot as plt

[16]:

C = ry.Config()

C.addFile(ry.raiPath('scenarios/pandaSingle.g'))

C.view()

[16]:

0

[17]:

cam = ry.CameraView(C)

cam.setCamera('cameraTop')

rgb, depth = cam.computeImageAndDepth(C)

pcl = ry.depthImage2PointCloud(depth, cam.getFxyCxy())

[18]:

print(rgb.shape, depth.shape, pcl.shape)

print(C.view_fxycxy())

(360, 640, 3) (360, 640) (360, 640, 3)

[1881.85195923 1881.85195923 200. 200. ]

[19]:

fig = plt.figure()

fig.add_subplot(1,2,1)

plt.imshow(rgb)

fig.add_subplot(1,2,2)

plt.imshow(depth)

plt.show()

[20]:

C.delFrame('pcl2')

f = C.addFrame('pcl2', 'cameraTop')

f.setPointCloud(pcl, [255,0,0])

C.view()

-- WARNING:kin.cpp:getFrame:392(-1) cannot find frame named 'pcl2'

[20]:

0

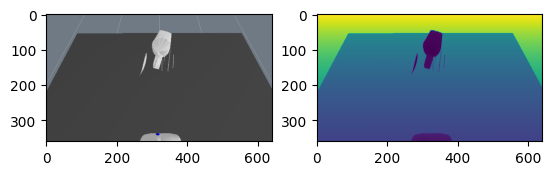

Physics-based Rendering using Nvidia NVISII

[21]:

from robotic import ry

from matplotlib import pyplot as plt

from robotic import render

print('version', ry.compiled())

---------------------------------------------------------------------------

ImportError Traceback (most recent call last)

/tmp/ipykernel_90908/164390504.py in ?()

----> 3 from robotic import ry

4 from matplotlib import pyplot as plt

5 from robotic import render

6 print('version', ry.compiled())

~/.local/lib/python3.8/site-packages/robotic/render.py in ?()

1 from robotic import ry

2 import numpy as np

----> 3 import nvisii

4

5 def quatMult(b, c):

6 a = b.copy();

~/.local/lib/python3.8/site-packages/nvisii/__init__.py in ?()

49

50

51

52

---> 53 from sys import version_info as _swig_python_version_info

54 if _swig_python_version_info < (2, 7, 0):

55 raise RuntimeError("Python 2.7 or later required")

56

ImportError: libcuda.so.1: cannot open shared object file: No such file or directory

[22]:

C = ry.Config()

C.addFile(ry.raiPath('scenarios/pandaSingle.g'))

C.view()

[22]:

0

[23]:

C.addFrame('light1') .setPosition([1,0,2]) .setShape(ry.ST.ssBox, [.5,2,.1,.02]) .setColor([.5]) .setAttribute('temperature', 6000)

C.addFrame('light2') .setPosition([-1,0,2]) .setShape(ry.ST.ssBox, [.5,2,.1,.02]) .setColor([.8]) .setAttribute('temperature', 6000)

C.view()

[23]:

0

[24]:

nv = render.NvisiiRenderer(600, 400, C.view_focalLength())

nv.addConfig(C)

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In[24], line 1

----> 1 nv = render.NvisiiRenderer(600, 400, C.view_focalLength())

2 nv.addConfig(C)

NameError: name 'render' is not defined

[25]:

nv.setCamera(C)

rgb = nv.render(256)

plt.imshow(rgb)

plt.show()

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In[25], line 1

----> 1 nv.setCamera(C)

2 rgb = nv.render(256)

3 plt.imshow(rgb)

NameError: name 'nv' is not defined

[26]:

del nv

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In[26], line 1

----> 1 del nv

NameError: name 'nv' is not defined

[ ]: